Facial recognition is not just useful for unlocking your iPhone.

Hong Kong protesters opposing the proposed extradition bill have more to worry about than the police. Marchers must deal with the traditional attacks on their attempts at self-governance, from police blockades to physical assaults. They also face facial recognition technology, which has begun to appear in police forces and elsewhere, even in the United States.

Masks are common at protests anywhere from Hong Kong to the shores of America. They serve both as a practical defense against tear gas and other police dispersal methods and as a way to hide the wearer's identity. Going a step further, in a telling sign of our modern age, Hong Kong protesters have used facial projectors to confuse AI facial recognition software, which is often deployed in cities where citizens least expect it.

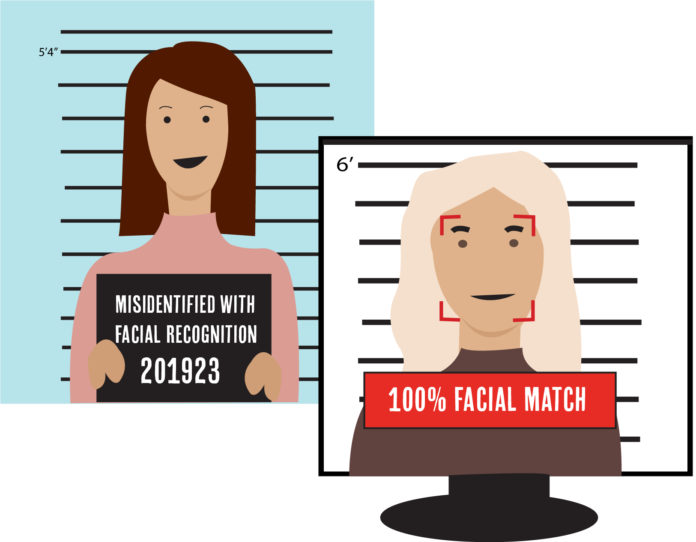

American police have begun to roll out similar facial recognition technologies, though in different capacities, for different reasons, and without China's all-encompassing social credit system. Programs such as Amazon's “Rekognition” match pictures of suspects and victims alike against mugshots and driver's licenses to help solve cases.

Even if it is not used as aggressively against protesters as in Hong Kong, facial recognition technology in America raises ethical concerns. According to an article from the ACLU, “Rekognition,” which is available for public use, has been found to “find” criminal faces in photos of members of Congress. All 28 of these matches were false, and they disproportionately involved non-white members of Congress. Given the power that tech companies hold, such false matches can cause real problems in a society increasingly dominated by new technologies.

Systems like this do not only produce false criminal matches; they also make more mistakes when identifying darker-skinned people and women. Nearly 40 percent of the false matches among members of Congress were people of color.

This makes the technology dangerous for police work. For now, the programs seem far too biased and flawed for the public to trust, especially given the sometimes spotty record of American police forces and law enforcement on racial issues.

Those in power should be wary of using such methods. People harmed by false identification or by abuse of power could become distrustful of authority and may resist the new technology, even in areas where it could help reduce crime or solve other social problems.

The public must always be able to trust authorities, whether governmental or technological. New technologies bring a lot to the table, offering increased security and protection from threats. However, new methods of law enforcement and protest dispersal should be approached with caution, especially facial recognition software, which has so far proven faulty at best and deeply invasive at worst.